How to represent a protein sequence

September 27, 2023

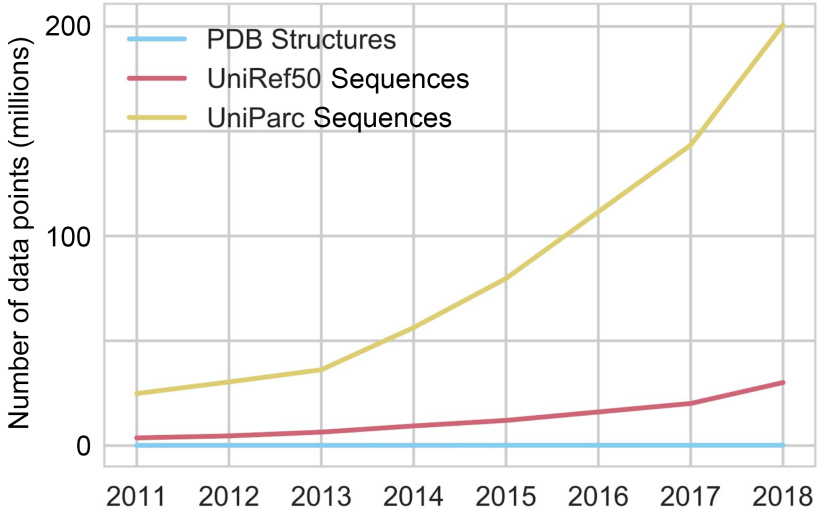

In the last decade, next generation sequencing propelled biology into a new information age. This came with a happy conundrum: we now have many orders of magnitude more protein sequence data than structural or functional data. We uncovered massive tomes written in nature's language, the blueprint of our wondrous biological tapestry, from the budding of a seed to the beating of a heart. But more often than not, we lack the ability to understand them.

An important piece of the puzzle is the ability to predict the structure and function of a protein from its sequence.

Thinking about this as a machine learning problem, structural or functional data are labels. With access to many sequences and their corresponding labels, we can show them to our model and iteratively correct it's predictions based on how closely they match the true labels. This approach is called supervised learning.

When labels are rare, as in our case with proteins, we need to resort to more unsupervised approaches like this:

-

Come up with a vector representation of the protein sequence that captures its important features. The vectors are called contextualized embeddings (I'll refer to them more colloquially as representation vectors). This is no easy task: it's where the heavy lifting happens and will be the subject of this article.

-

Use the representation vectors as input to some supervised learning model. The informative representation has hopefully made this easier that 1) we don't need as much labeled data and 2) the model we use can be simpler, such as linear or logistic regression.

This is referred to as transfer learning: the knowledge learned by the representation (1.) is later transferred to a supervised task (2.).

What about MSAs?

We talked in a previous post about ways to leverage the rich information hidden in Multiple Sequence Alignments (MSAs) – the co-evolutionary data of proteins – to predict structure and function. That problem is easier:

However, those solutions don't work well for proteins that are rare in nature or designed de novo for which we don't have enough co-evolutionary data to make a good MSA.

In those cases, can we still make reasonable predictions based on a single amino acid sequence? Another way to look at the techniques in this article is that they are answers to that question. They pick up where MSAs fail. Moreover, models that don't rely on MSAs aren't limited to a single protein family: they understand some fundamental properties of all proteins. Our goal is to build such a model.

Representation learning

The general problem of converting some data into a vector representation is called representation learning, an important technique in natural language processing (NLP). Let's see how it can be applied to proteins.

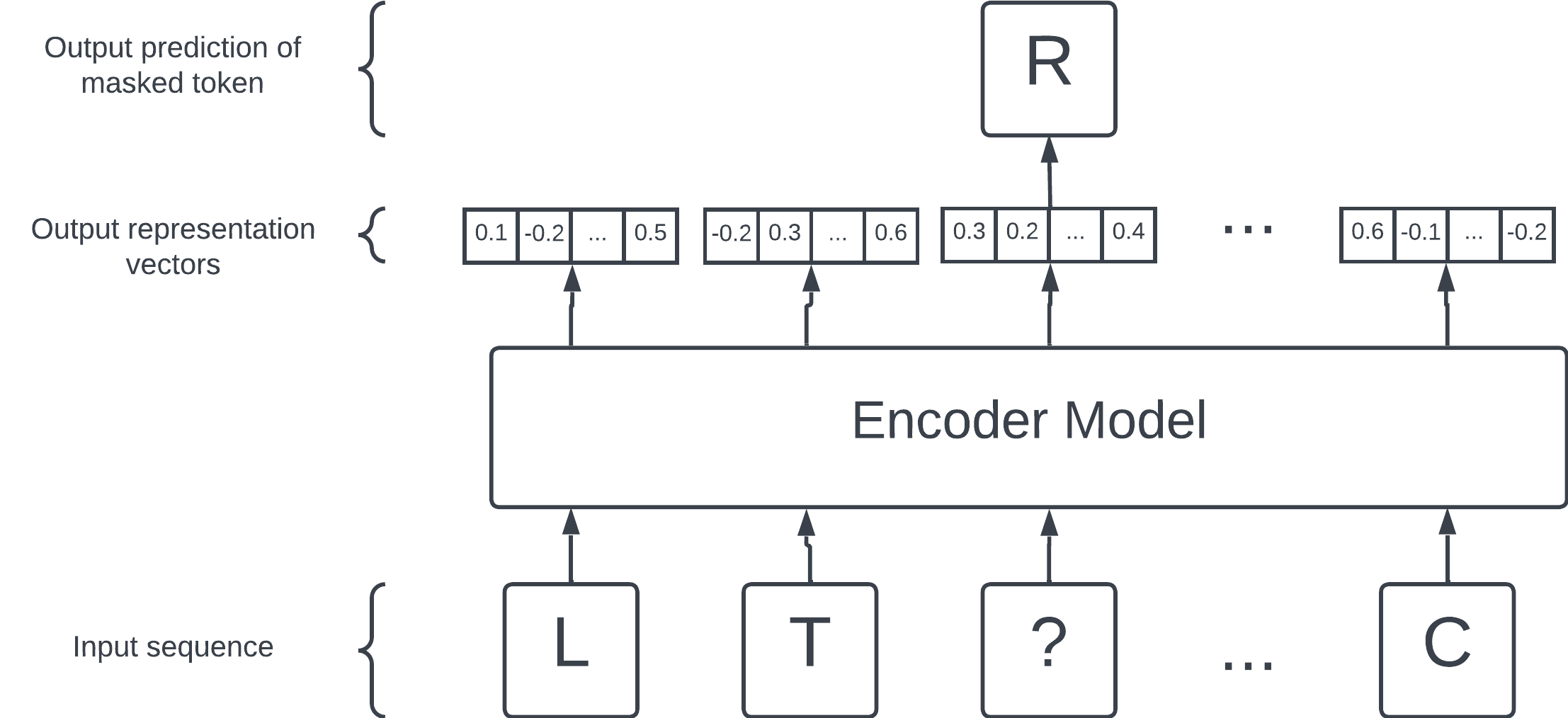

We want a function that takes an amino acid sequence and outputs representation vectors. This function is often called an encoder.

Tokens

In NLP lingo, each amino acid is a token. Similarly, we can embed an English sentence in the same way, using characters as tokens.

As an aside, words are another reasonable choice for tokens in natural language.

Current state-of-the-art language models use something in-between the two: sub-word tokens. tiktoken is the tokenizer used by OpenAI to break sentences down into lists of sub-word tokens.

Context matters

If you are familiar with NLP embedding models like word2vec, the word embedding might be a bit confusing. Vanilla embeddings – like the simplest one-hot encodings or vectors created by word2vec – map each token to a unique vector. They are much easier to create and often serves as input to neural networks, which only understand numbers, not text.

In contrast, our contextualized embedding vectors for each token, as the name suggests, incorporates context from its surrounding tokens. Therefore, two identical tokens don't necessarily have the same embedding vector. These vectors are the output of our neural networks.

(For this reason, I refer to these contextualized embedding vectors as representation vectors – or simply representations – throughout this article.)

If we want one vector that describes the the entire sequence – instead of a vector for each amino acid – we can simply average the values in each vector.

Now, let's work on creating these representation vectors!

Creating a task

Remember, we are constructing these vectors purely from sequences in an unsupervised setting. Without labels, how do we even know if our representation is any good? It would be nice to have some task: an objective that our model can work towards, along with a scoring function telling us how it's doing.

Let's come up with a task: given the sequence with some random positions masked away

which amino acids should go in the masked positions?

We know the ground truth label from the original sequence, which we can use to guide the model like we would in supervised learning. Presumably, if our model becomes good at predicting the masked amino acids, it must have learned something meaningful about the intricate dynamics within the protein.

This lets us take advantage of the wealth of known sequences (from publicly available large databases such as the Protein Data Bank (PDB)), each of which is now a labeled training example. In NLP, this approach is called masked language modeling (MLM), a form of self-supervised learning.

Though we will focus on masked language modeling in this article, another way to construct this self-supervision task is via causal language modeling: given some tokens, ask the model to predict the next one. This is the approach used in OpenAI's GPT.

The model

(This section requires some basic knowledge of deep learning. If you are new to deep learning, I can't recommend enough Andrej Karpathy's YouTube series on NLP, which covers the foundations of deep learning and builds to cutting-edge models like GPT.)

The first protein language encoder model of this kind is UniRep (universal representation), which used a technique called Long Short Term Memory (LSTM). (It uses the causal instead of masked language modeling objective, predicting amino acids from left to right.)

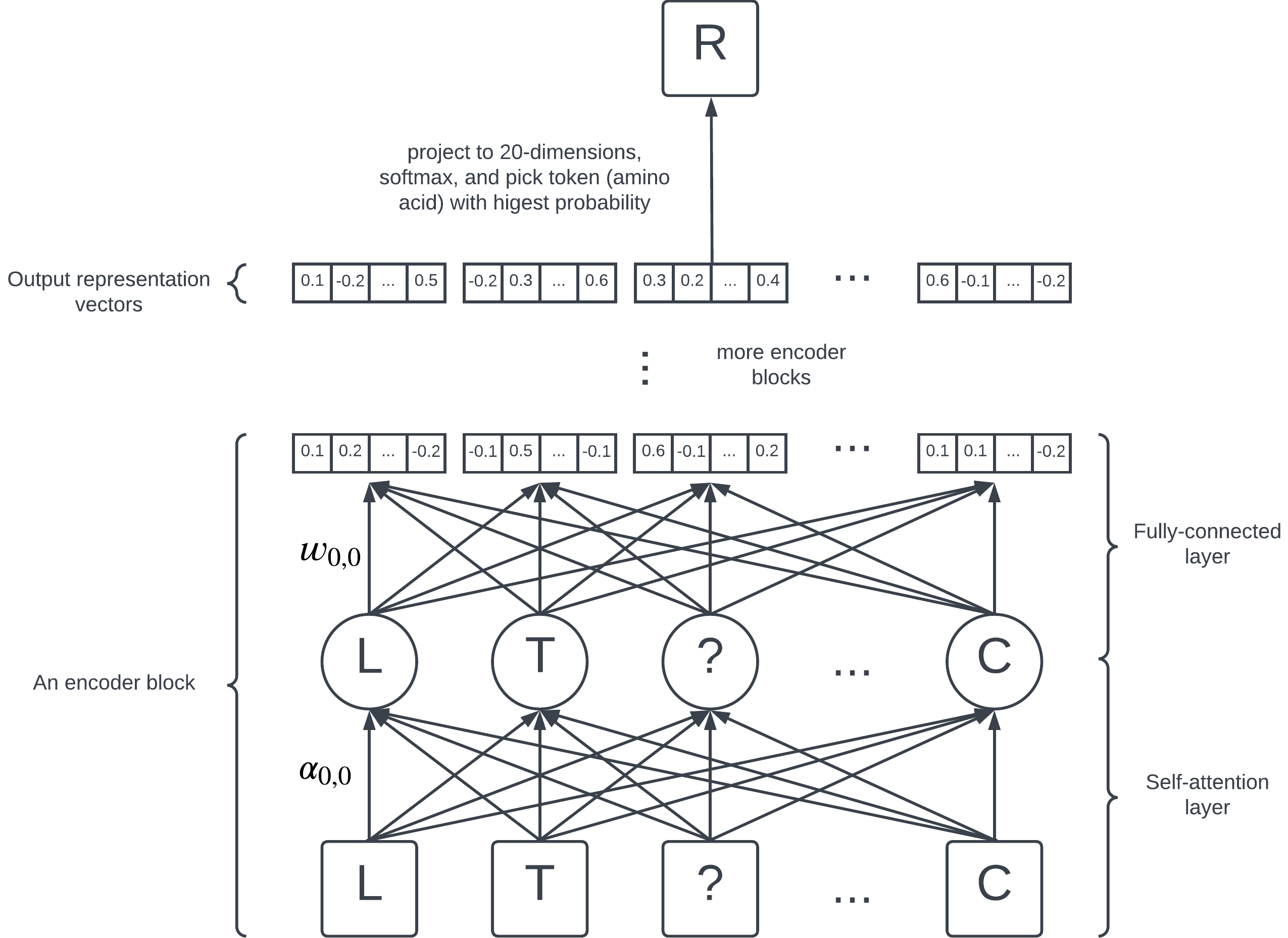

More recently, transformer models that rely on a mechanism called self- attention have taken the spotlight. BERT stands for Bidirectional Encoder Representations from Transformer and is a class of state-of-the-art natural language encoders developed at Google. We will talk in some detail about a BERT-like encoder model applied to proteins.

BERT consists of 12 encoder blocks, each containing a self-attention layer and a fully-connected layer. On the highest level, they are just a collection of numbers (parameters) learned by the model; each edge in the diagram represents a parameter.

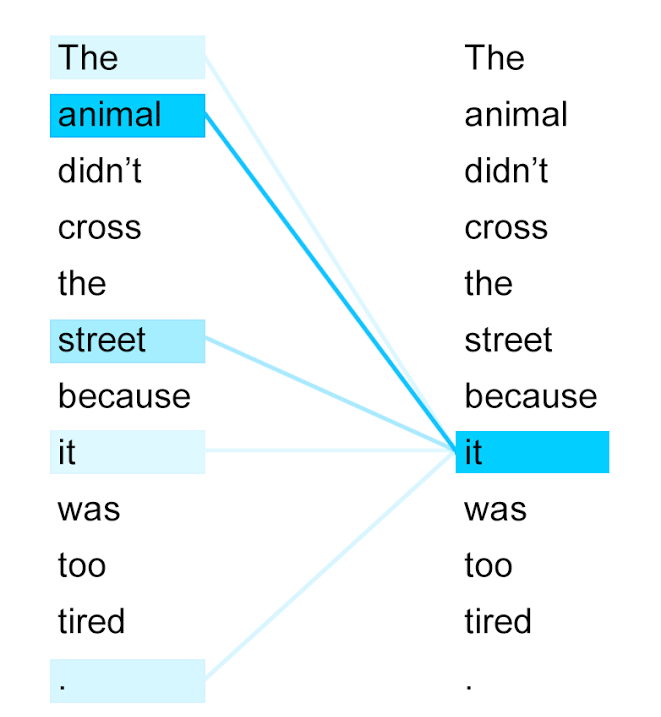

Roughly speaking, the parameters in the self-attention layer, also known as attention scores, capture the alignment, or similarity, between two amino acids. If is large, we say that the token attends to the token. Intuitively, each token can attend to different parts of the sequence, focusing on what's relevant to it and glancing over what's not.

Here's an example of attention scores of a transformer trained on a word-tokenized sentence:

The token "it" attends strongly the token "animal" because of their close relationship – they refer to the same thing – whereas most other tokens are ignored. It is our goal to tease out similar semantic relationships between amino acids.

The details of how these attention scores are calculated are explained and visualized in Jay Alammar's amazing post The Illustrated Transformer. Here's a helpful explanation on how they differ from the weights in the fully-connected layer.

As it turns out, once we train our model on the masked language modeling objective, the output vectors in the final layers become useful encodings of the underlying sequence – exactly the representation we've set out to build.

There are more details

I hoped to convey some basic intuition about self-attention and masked language modeling and have omitted many details. There's a short list:

-

The attention computations are usually repeated many times independently and in parallel. Each layer in the neural net contains sets of attention scores, i.e. attention heads ( in BERT). The attention scores from the different heads are combined via a learned linear projection.

-

The tokens first need to be converted into vectors before they can be processed by the neural net.

- For this we use a vanilla embedding of amino acids – like one-hot encoding – not to be confused with our output representations often called contextualized embeddings.

- This input embedding contains a few other pieces of information, such as the positions of each amino acid within the sequence.

-

Following the original Transformer, BERT uses layer normalization, a technique that makes training deep neural nets easier.

-

There are 2 fully-connected layers in each encoder block, instead of the 1 shown in diagram above.

Using the representation

Once we have our representation vectors, we can train simple models like logistic regression with our vectors as input. This is the approach used in ESM, achieving state-of-the-art performance on predictions of 3D contacts and mutation effects. We can think of the logistic regression model as merely teasing out the information already contained in the input representation, an easy task.

If you are interested in these results, check out these papers from the ESM team.

A peek into the black box

We've been talking a lot about all this "information" learned by our representation. What exactly does it look like?

UniRep

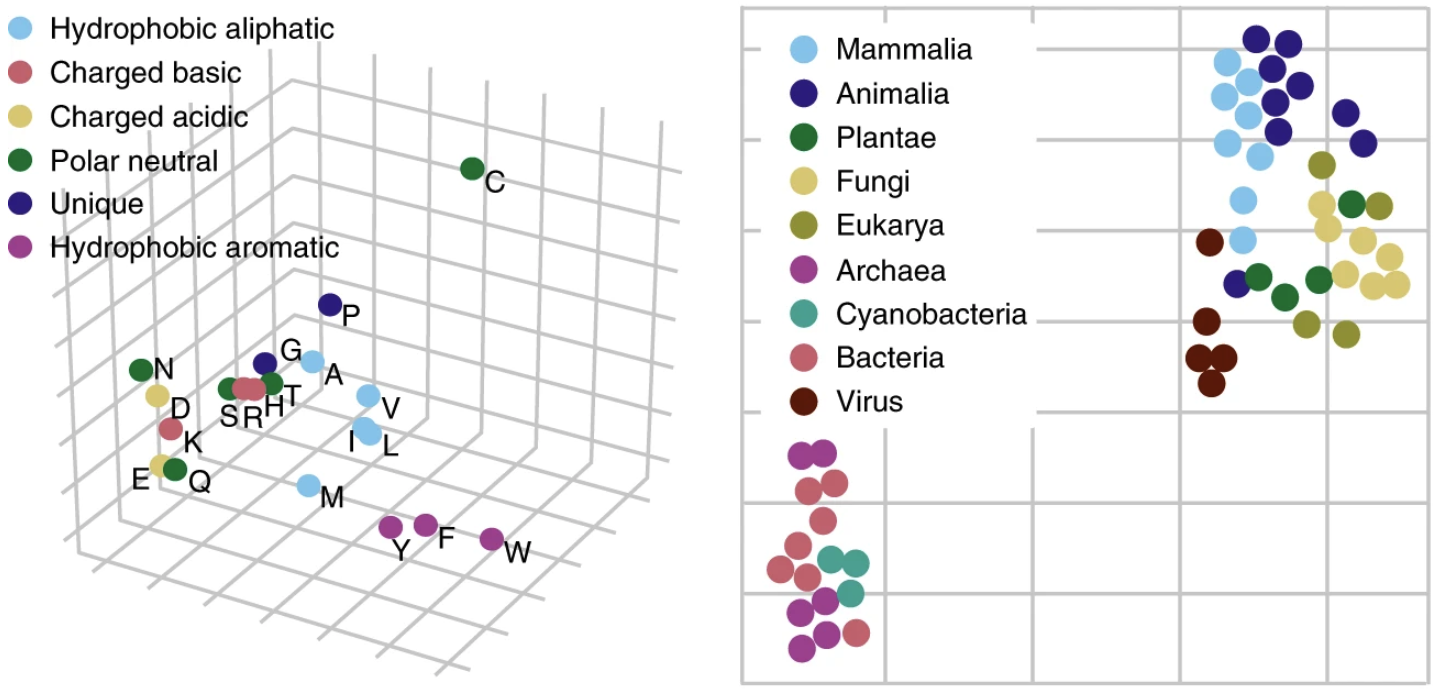

UniRep vectors capture biochemical properties of amino acids and phylogeny in sequences from different organisms.

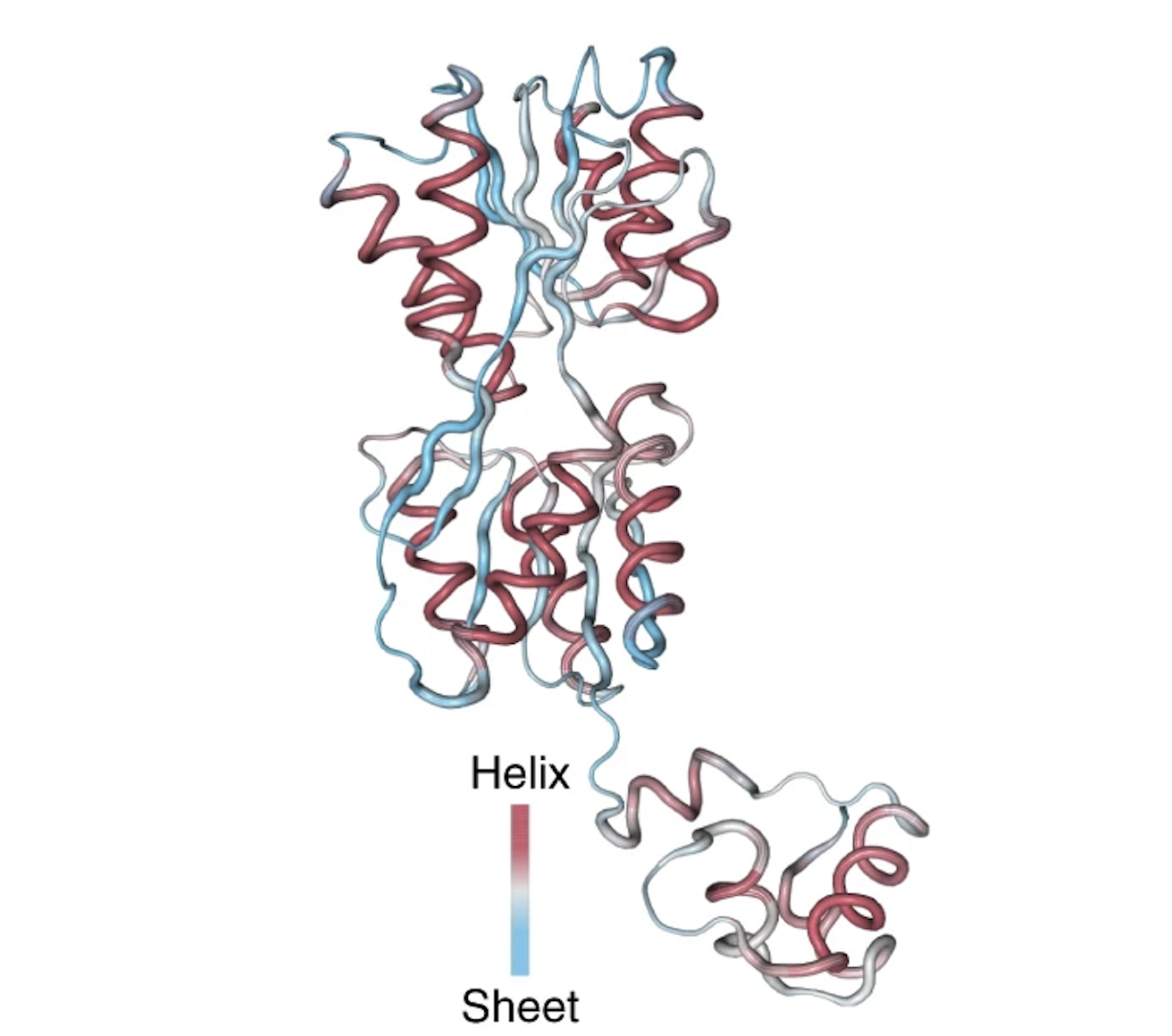

More incredibly, one of the neurons in UniRep's LSTM network showed firing patterns highly correlated with the secondary structure of the protein: alpha helices and beta sheets. UniRep has clearly learned something meaningful about the protein's folded structure.

Transformer models

In NLP, the attention scores in Transformer models tend to relate to the semantic structure of sentences. Does attention in our protein language models also capture something meaningful?

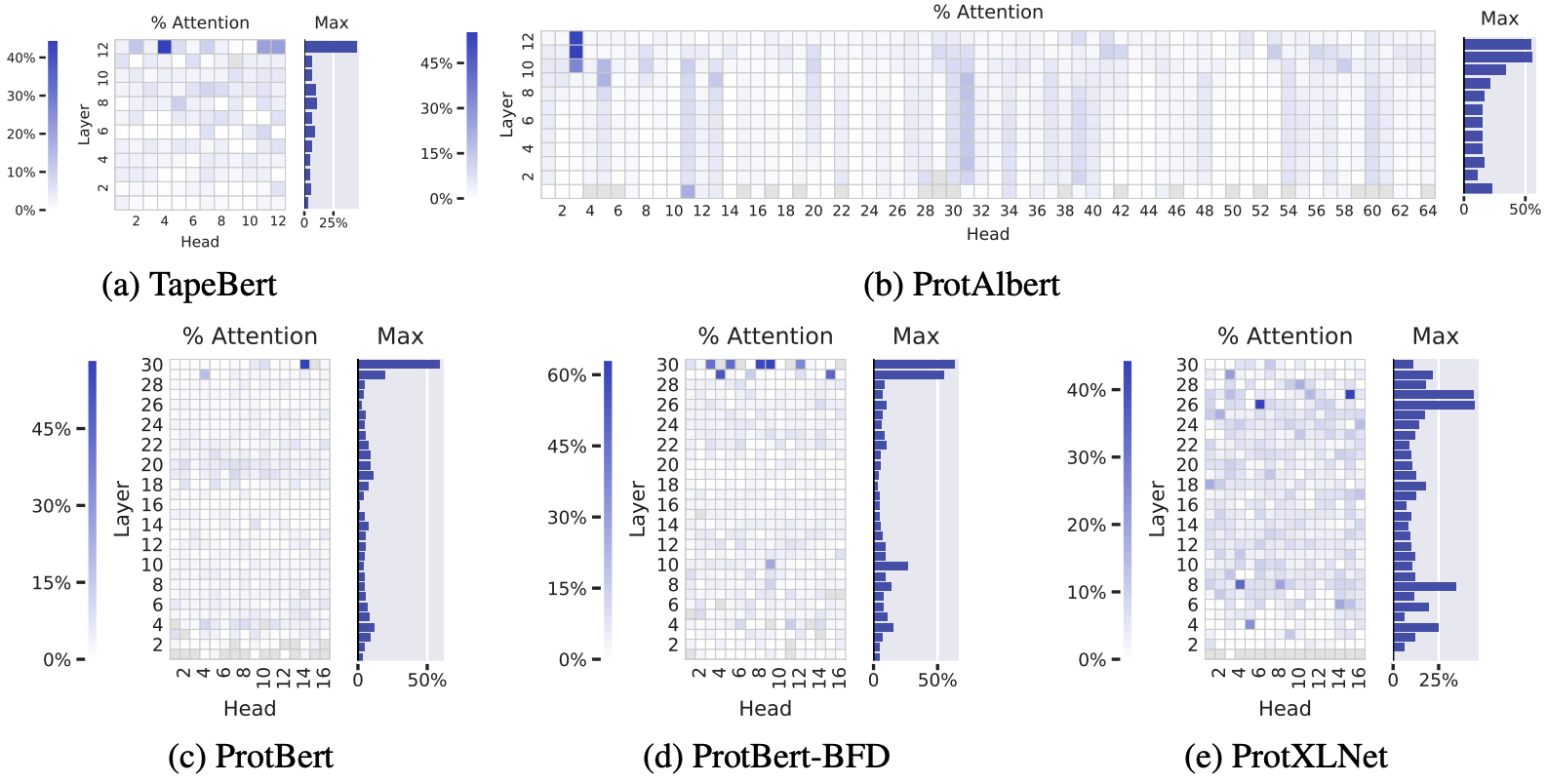

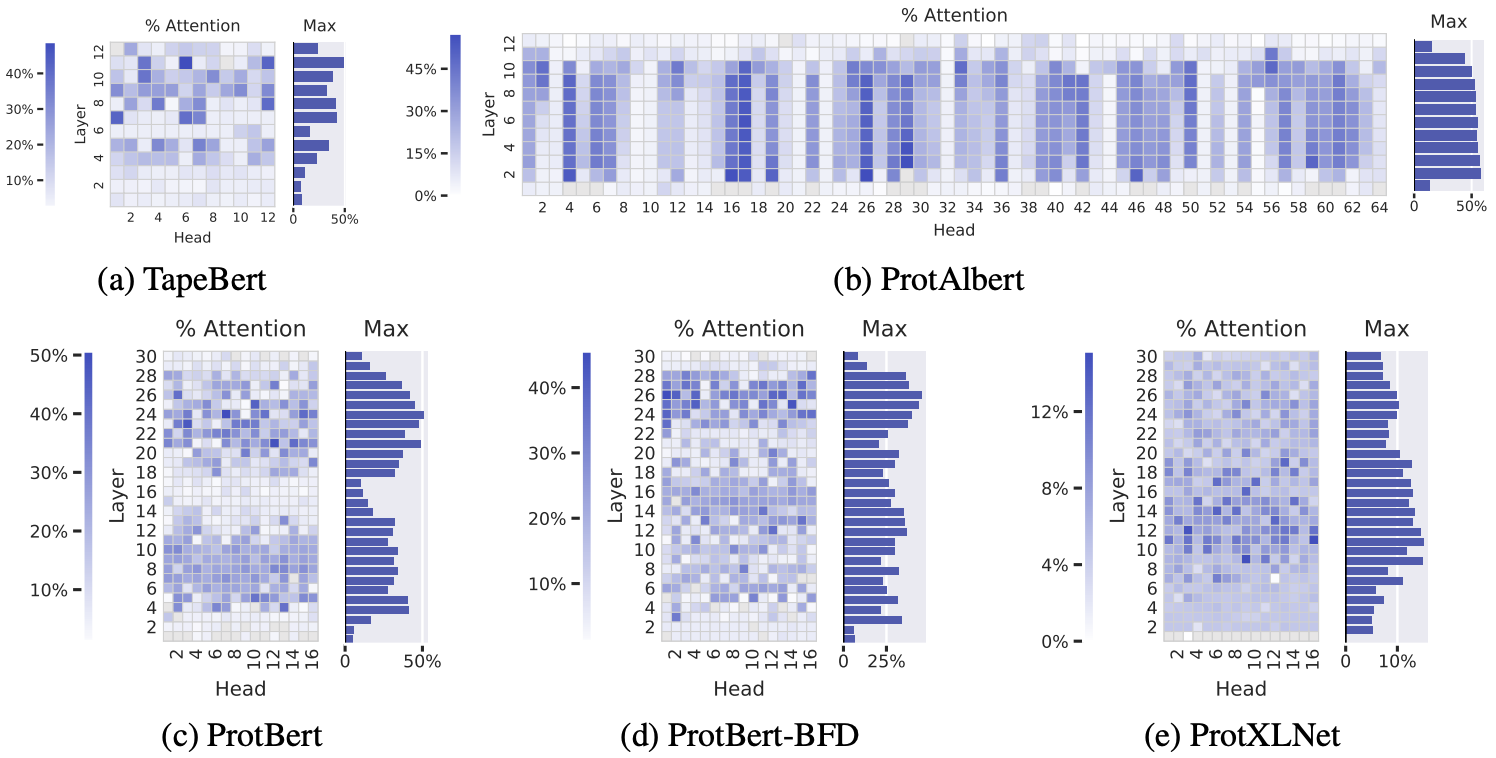

Let's look at 5 unsupervised Transformer models on proteins sequences – all trained in the same BERT-inspired way we described. Amino acid pairs that with high attention scores are more often in 3D contact in the folded structure, especially in the deeper layers.

Similarly, a lot of attention is directed to binding sites – the functionally most important regions of a protein – throughout the layers.

Applying supervised learning to attention scores – instead of output representations – also achieves astonishing performance in contact prediction. Compared to GREMLIN[https://openseq.org/], an MSA-based method similar to the one we talked about in the previous post, logistic regression trained on ESM's attention scores yielded better performance after only seeing 20 (!) labeled training examples.